A Disaster Recovery (DR) plan should be part of your security governance framework. You need to make sure you can safely and completely recover IT systems and business-critical data in the case of data loss, the failure of a machine, or even a complete cloud compromise, such as a ransomware/cryptoware incident. Ransomware is the most significant malware threat of 2019. About 75% of companies infected with ransomware were running up-to-date endpoint protection, and ransomware costs businesses more than $75 billion per year. This alone should be reason enough to have a DR plan in place.

Secure backups are also needed to pass PCI DSS and HIPAA audits and to comply with other industry requirements. In an emergency, your DR plan describes how to restore your backups, and that plan is only as good as the backups behind it. This means you have to monitor and test both your backups and your backup processes.

Backup methods

It is bad practice to rely on a single backup solution and a single backup destination. You do not want that destination to be inaccessible or corrupt in the middle of a recovery. And what if the backup method itself turns out to be broken? The best way to prevent such a disaster is to monitor your backup methods and to run multiple backup methods with multiple secure backup locations. Strike a balance, though: every additional (third-party) location is another place where your data can be exposed, so do not spread your data across more locations than you need.

In this blog post, we elaborate on four free, industry-standard backup methods for the web directory /var/www/htdocs/ (the default document root of OpenBSD's httpd; adjust the path for Apache, nginx or lighttpd) on a Virtual Private Server (VPS) running OpenBSD 6.6, hosted on Vultr.com, a fine alternative to DigitalOcean. These backup methods should also work with little to no modification on Linux distributions such as Ubuntu, Red Hat and Debian:

1) Synchronize the web directory to a directory on another VPS over SSH

2) Copy the web directory as a compressed, AES-256-encrypted zip file to another machine over SSH

3) Use a private GitHub repository (git) to synchronize the web directory over HTTPS (TLS)

4) Snapshot the VPS through the Vultr API over HTTPS (TLS)

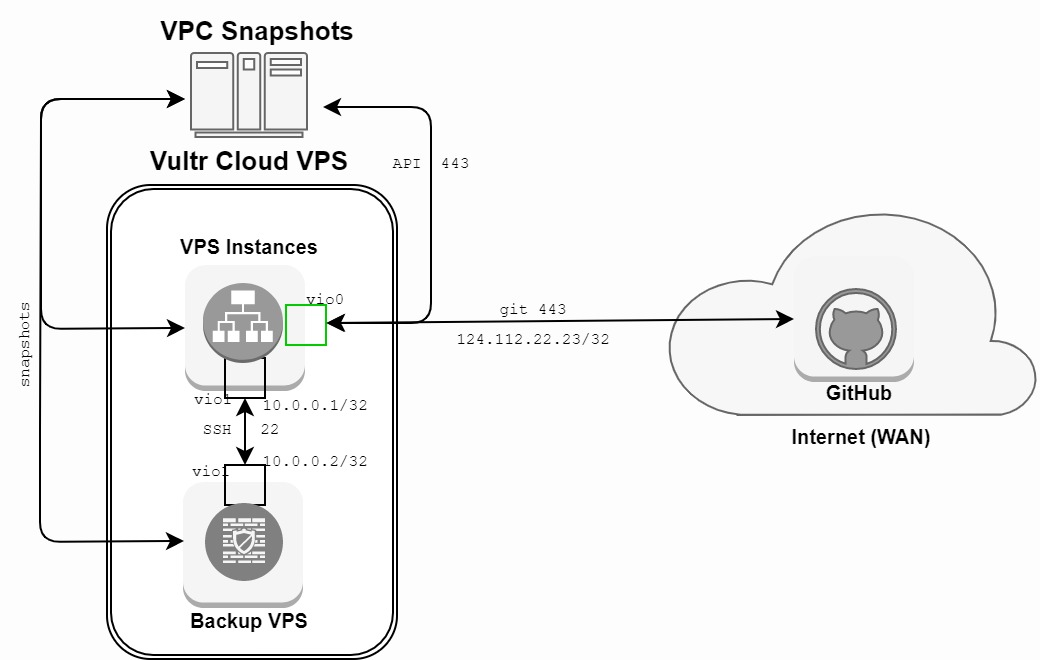

All backup scripts can be found in the following GitHub repository. Place these scripts in a directory that only the backup user can write to, and do not run them as root. Also, keep the scripts separate instead of concatenating them into one, so that a failure in one backup method does not take the others down with it. We create a separate cron job for each backup method, running daily between 3 and 6 a.m. Figure 1 shows the high-level architecture of the backup methods and their respective backup locations:

Figure 1: System architecture of the backup mechanisms

1. rsync backup

We start off with rsync, which is ideal for synchronizing complete folders to another VPS. rsync uses SSH as its transport, so the data is encrypted in transit. Instead of SSH password authentication, we create a new SSH key pair dedicated to backups. This way, we can rotate the backup key independently and easily tell backup sessions apart from interactive user logins in the audit logs. rsync only transfers files that differ between source and destination, and for those files it sends only the changed parts (its delta-transfer algorithm).

Make sure this target machine is isolated from the rest of your network, for example by only accepting SSH connections from the machines it backs up. Alternatively, you could use an S3-compatible object storage bucket as the destination instead of a VPS.
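The script itself is in the repository mentioned above and is not reproduced here. As a rough illustration, here is a minimal sketch of what /home/username/backup-rsync.sh could look like. The destination host, the backup user and the key path are hypothetical placeholders, and the [FAIL - Backup] message format is an assumption; the success message mirrors the log excerpt further down.

```shell
#!/bin/sh
# Minimal sketch of backup-rsync.sh. Host, user and key path are
# hypothetical placeholders; override them through the environment.
set -u

SRC=${SRC:-/var/www/htdocs/}       # trailing slash: copy the directory's contents
DEST=${DEST:-backup@backup-host.example:/home/backup/htdocs/}
SSH_KEY=${SSH_KEY:-/home/username/.ssh/id_ed25519_backup}  # dedicated backup key (no spaces in path)

# Write to syslog; a logging problem must never fail the backup itself.
log() { logger "$1" 2>/dev/null || true; }

rsync_backup() {
    # -a       recurse and preserve permissions, times and symlinks
    # --delete mirror deletions; a wiped or encrypted source is mirrored too,
    #          which is why the other methods keep history
    # -e       use the dedicated key; BatchMode fails instead of prompting
    if rsync -a --delete -e "ssh -i $SSH_KEY -o BatchMode=yes" "$SRC" "$DEST"; then
        log "[OK - Backup] rsync backup successful"
    else
        log "[FAIL - Backup] rsync backup failed"
        return 1
    fi
}

# Only run when executed under this file name (as in the crontab entries),
# so the function can also be sourced, e.g. for testing.
case "${0##*/}" in
    backup-rsync.sh) rsync_backup ;;
esac
```

Dropping --delete keeps files on the backup side that were removed from the web directory, at the cost of the copy no longer being an exact mirror.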

2. Encrypted zip backup over scp

Our second backup method copies an encrypted zip file to another machine. Instead of copying each file in the web directory individually, we compress the directory into a single zip file and transfer it over scp, which also uses SSH to encrypt the network connection. If you need to back up over the WAN, you could add a second layer of network encryption, such as a VPN or an IPsec tunnel. In our case, we scp the file to a local machine.

Keep in mind that scp only encrypts the data in transit; without further measures, the zip file would be stored unencrypted at rest. We therefore encrypt the zip file with LibreSSL using AES-256 and a symmetric key. Symmetric encryption works just fine instead of public-key encryption as long as you do not need to share backups with more than one party.

First, check which version of OpenSSL you are using. LibreSSL was forked from OpenSSL by the OpenBSD team in 2014 with the goal of improving security by removing unsafe APIs and reducing the code base. If you have OpenSSL instead of LibreSSL installed, make sure it is version 1.1.1 or later, which supports the -pbkdf2 and -iter options used below, or switch to GnuPG.

$ openssl version

LibreSSL 3.0.2

Next, we use /dev/urandom as a source of randomness to generate a 256-character passphrase of random [a-z][A-Z][0-9][!@#$%^&*_-] characters. openssl derives the actual AES-256 key from this passphrase with PBKDF2. Rotate this key at least once a year, and keep each old key for as long as you keep backups encrypted with it.

$ tr -dc '[:alnum:]!@#$%^&*_-' < /dev/urandom | fold -w 256 | head -n 1 > backup.key

$ cat backup.key

%__b*_bK0m58jsq0C5L&kFm2DenkG1vsrhV$HSYl_1gXzz7MytL7Aja_4FNOfSdm^V3wiaMwdundxmPizMXR2%jpC3ZnV-mvE2#ZN*yI4^4aq3gCB&N$2qNpGFULf!omkAPYEP25wIv-4Nh^HC@Wb$2F9HSRqw-VAgjl2F2dLqpeYxo!!GT@L@N7coK7!!59tU*_gqXo_#uyv-$ESx1jG%*BDvn_O$p#*4IY78B4EVYjiwnjyMKAv8Cz3zupH7ax$

$ chmod 400 backup.key

A freshly spawned VPS, VM or container may not yet have gathered enough entropy from system activity, such as disk, network and clock interrupts, which the kernel uses to seed the cryptographically secure pseudo-random number generator (CSPRNG) behind /dev/urandom. If that concerns you, you can get your randomness from a Hardware Security Module (HSM) or a True Random Number Generator (TRNG) USB stick instead. Such sticks typically derive their randomness from semiconductor noise, for example avalanche noise in a reverse-biased junction.

A little side note: the enc command of OpenSSL and LibreSSL does not support authenticated encryption modes such as AES-256-GCM, which is why we use AES-256-CBC instead. The following commands encrypt and decrypt the compressed zip file. Note that CBC provides confidentiality but no integrity protection, so you can and should add an authentication tag while encrypting, for example an HMAC over the ciphertext (encrypt-then-MAC). Alternatively, age (Actually Good Encryption) handles authenticated encryption for you. Read this blog post for more information.

$ openssl enc -in backup.zip -out backup.zip.enc -pass file:backup.key -e -md sha512 -salt -aes-256-cbc -iter 100000 -pbkdf2

$ openssl enc -in backup.zip.enc -out backup.zip -pass file:backup.key -d -md sha512 -salt -aes-256-cbc -iter 100000 -pbkdf2
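One way to add the authentication tag is encrypt-then-MAC: compute an HMAC-SHA256 over the encrypted file and verify it before decrypting anything. The sketch below wraps the two commands above in shell functions. The separate MAC key file (backup.mac.key) and the .hmac side file are assumptions of this sketch, not part of the original scripts.

```shell
#!/bin/sh
# Sketch: encrypt-then-MAC around the openssl enc commands above.
# Assumption: a second, independent key file (hypothetical backup.mac.key),
# generated like backup.key, holds the HMAC key.
set -u

# Print the hex HMAC-SHA256 of file $1 using the key stored in file $2.
# awk keeps only the last field, since the label differs between versions.
# Caveat: -hmac takes the key as an argument, so it is briefly visible in ps.
hmac_file() {
    openssl dgst -sha256 -hmac "$(cat "$2")" < "$1" | awk '{ print $NF }'
}

# $1 = plaintext file, $2 = encryption key file, $3 = MAC key file.
# Produces $1.enc and the tag file $1.enc.hmac.
encrypt_and_tag() {
    openssl enc -in "$1" -out "$1.enc" -pass "file:$2" -e -md sha512 -salt \
        -aes-256-cbc -iter 100000 -pbkdf2 &&
        hmac_file "$1.enc" "$3" > "$1.enc.hmac"
}

# $1 = encrypted file, $2 = encryption key file, $3 = MAC key file,
# $4 = output file. Refuses to decrypt anything whose tag does not match.
verify_and_decrypt() {
    expected=$(cat "$1.hmac" 2>/dev/null)
    actual=$(hmac_file "$1" "$3")
    if [ -z "$actual" ] || [ "$expected" != "$actual" ]; then
        echo "HMAC mismatch: refusing to decrypt $1" >&2
        return 1
    fi
    openssl enc -in "$1" -out "$4" -pass "file:$2" -d -md sha512 -salt \
        -aes-256-cbc -iter 100000 -pbkdf2
}
```

If you adopt this, transfer the .hmac file together with the encrypted archive, and keep the MAC key as carefully as the encryption key.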

The following script is used to copy the encrypted zip file to another machine:
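The original script is not reproduced here. A minimal sketch of what backup-zip.sh could look like follows, assuming the zip utility is installed and reusing the openssl enc command above. The destination host, key paths and staging directory are hypothetical placeholders, and the transfer step is a separate function so it can be swapped out.

```shell
#!/bin/sh
# Minimal sketch of backup-zip.sh. Host, paths and key locations are
# hypothetical placeholders; override them through the environment.
set -u

SRC_PARENT=${SRC_PARENT:-/var/www}
SRC_DIR=${SRC_DIR:-htdocs}
WORKDIR=${WORKDIR:-/home/username/backup-zip-staging}
KEY_FILE=${KEY_FILE:-/home/username/backup.key}
SSH_KEY=${SSH_KEY:-/home/username/.ssh/id_ed25519_backup}
DEST=${DEST:-backup@backup-host.example:backup-zip/}

log() { logger "$1" 2>/dev/null || true; }

# Copy file $1 to $2 with scp, using the dedicated backup key.
transfer() {
    scp -q -i "$SSH_KEY" -o BatchMode=yes "$1" "$2"
}

zip_backup() {
    archive="$WORKDIR/$SRC_DIR-$(date +%Y%m%d).zip"
    mkdir -p "$WORKDIR" || return 1
    # Zip from the parent directory so the archive holds relative paths.
    if ! (cd "$SRC_PARENT" && zip -qr "$archive" "$SRC_DIR"); then
        log "[FAIL - Backup] zip backup: compression failed"
        return 1
    fi
    # Encrypt, then remove the plaintext archive whether or not that worked.
    openssl enc -in "$archive" -out "$archive.enc" -pass "file:$KEY_FILE" -e \
        -md sha512 -salt -aes-256-cbc -iter 100000 -pbkdf2
    status=$?
    rm -f "$archive"
    if [ "$status" -ne 0 ]; then
        log "[FAIL - Backup] zip backup: encryption failed"
        return 1
    fi
    if transfer "$archive.enc" "$DEST"; then
        rm -f "$archive.enc"   # retention is handled on the target machine
        log "[OK - Backup] SSH zip backup transfer is successful"
    else
        log "[FAIL - Backup] SSH zip backup transfer failed"
        return 1
    fi
}

# Only run when executed under this file name (as in the crontab entries).
case "${0##*/}" in
    backup-zip.sh) zip_backup ;;
esac
```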

3. GitHub synchronization

GitHub offers free private repositories, with git acting as the Version Control System (VCS): every commit records exactly which files changed, which gives us a change log of the web directory. Like rsync, our GitHub backup script only uploads files that have been edited, created or deleted. Keep in mind that git does not preserve file system metadata such as ownership and permissions (it only tracks the executable bit), so you may need to restore these by hand. This script synchronizes files over TLS and stores them in plain text on GitHub:
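A minimal sketch of what backup-github.sh could look like. It assumes REPO_DIR is an existing clone of your private GitHub repository whose origin remote uses an HTTPS URL with stored credentials (for example a personal access token), and that git's user.name and user.email are configured; both paths are hypothetical placeholders. The working tree is replaced with the current web directory on every run, so deletions are committed as well.

```shell
#!/bin/sh
# Minimal sketch of backup-github.sh. REPO_DIR is assumed to be a clone of
# the private repository with an HTTPS "origin"; paths are placeholders.
set -u

SRC=${SRC:-/var/www/htdocs}
REPO_DIR=${REPO_DIR:-/home/username/htdocs-backup}

log() { logger "$1" 2>/dev/null || true; }

github_backup() {
    # Mirror the web directory into the working tree: remove everything
    # except .git, copy the current files back in, and stage all changes
    # (including deletions).
    if ! { find "$REPO_DIR" -mindepth 1 -maxdepth 1 ! -name .git -exec rm -rf {} + &&
           cp -Rp "$SRC"/. "$REPO_DIR"/ &&
           git -C "$REPO_DIR" add -A; }; then
        log "[FAIL - Backup] GitHub backup failed while staging files"
        return 1
    fi
    # Nothing staged means nothing changed since the last run.
    if git -C "$REPO_DIR" diff --cached --quiet; then
        log "[OK - Backup] GitHub backup successful (no changes)"
        return 0
    fi
    if git -C "$REPO_DIR" commit -q -m "Automated backup $(date '+%Y-%m-%d %H:%M')" &&
       git -C "$REPO_DIR" push -q origin HEAD; then
        log "[OK - Backup] GitHub backup successful"
    else
        log "[FAIL - Backup] GitHub backup failed"
        return 1
    fi
}

# Only run when executed under this file name (as in the crontab entries).
case "${0##*/}" in
    backup-github.sh) github_backup ;;
esac
```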

4. Automated snapshots

Vultr and other public cloud providers, such as AWS and DigitalOcean, provide an API to orchestrate your systems from an Infrastructure as Code (IaC) perspective. Vultr runs its VMs on the KVM (Kernel-based Virtual Machine) hypervisor. The following script creates automated snapshots and deletes the oldest snapshot when the number of snapshots would exceed the limit of 11 free snapshots:
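The original script (which, judging by the SUBIDs in the logs, targets the since-deprecated v1 API) is not reproduced here. The sketch below is written against the Vultr API v2 instead, assuming its GET/POST /v2/snapshots and DELETE /v2/snapshots/{id} endpoints with bearer-token authentication, a VULTR_API_KEY environment variable, a hypothetical INSTANCE_ID, curl and jq installed, and that all snapshots fit on the first result page. Check these details against Vultr's current API reference before relying on it.

```shell
#!/bin/sh
# Minimal sketch of backup-vultr-snapshot.sh against the Vultr API v2.
# Assumptions: VULTR_API_KEY is set, INSTANCE_ID is your (hypothetical)
# instance ID, curl and jq are installed, and no pagination is needed.
set -u

VULTR_API=${VULTR_API:-https://api.vultr.com/v2}
INSTANCE_ID=${INSTANCE_ID:-}
SNAPSHOT_LIMIT=${SNAPSHOT_LIMIT:-11}   # free snapshot limit quoted above

log() { logger "$1" 2>/dev/null || true; }

# Thin curl wrappers; -f makes HTTP errors return a non-zero status.
# Caveat: the API key is passed as an argument and briefly visible in ps.
api_get()    { curl -fsS -H "Authorization: Bearer $VULTR_API_KEY" "$VULTR_API$1"; }
api_delete() { curl -fsS -X DELETE -H "Authorization: Bearer $VULTR_API_KEY" "$VULTR_API$1"; }
api_post() {
    curl -fsS -X POST -H "Authorization: Bearer $VULTR_API_KEY" \
        -H "Content-Type: application/json" --data "$2" "$VULTR_API$1"
}

# Read "date_created id" lines on stdin (ISO 8601 dates sort as text) and
# print the IDs of the oldest snapshots to delete so that one new snapshot
# still fits under limit $1.
snapshots_to_delete() {
    sort | awk -v limit="$1" '
        { ids[NR] = $2 }
        END { for (i = 1; i <= NR - limit + 1; i++) print ids[i] }'
}

snapshot_backup() {
    : "${VULTR_API_KEY:?VULTR_API_KEY is not set}"
    for id in $(api_get /snapshots |
        jq -r '.snapshots[] | "\(.date_created) \(.id)"' |
        snapshots_to_delete "$SNAPSHOT_LIMIT"); do
        if api_delete "/snapshots/$id"; then
            log "[OK - Backup] Deleted oldest Vultr snapshot: '$id'"
        fi
    done
    body="{\"instance_id\":\"$INSTANCE_ID\",\"description\":\"automated $(date +%F)\"}"
    if api_post /snapshots "$body" > /dev/null; then
        log "[OK - Backup] Creating Vultr snapshot for instance: '$INSTANCE_ID'"
    else
        log "[FAIL - Backup] Vultr snapshot for instance '$INSTANCE_ID' failed"
        return 1
    fi
}

# Only run when executed under this file name (as in the crontab entries).
case "${0##*/}" in
    backup-vultr-snapshot.sh) snapshot_backup ;;
esac
```

The limit applies to the whole account, so with several VPSs (as in the log excerpt below) you would loop over their instance IDs and run the pruning step before each snapshot.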

Backup monitoring

All backup scripts call the logger command, which writes an entry to the syslog audit trail recording whether each backup completed successfully or failed. Each script gets its own cron job:

$ crontab -e

0 3 * * * /home/username/backup-rsync.sh

0 4 * * * /home/username/backup-zip.sh

0 5 * * * /home/username/backup-github.sh

0 6 * * * /home/username/backup-vultr-snapshot.sh

$ grep " Backup]" /var/log/messages

[...]

Nov 29 03:00:02 cryptsus username: [OK - Backup] rsync backup successful

Nov 29 04:00:04 cryptsus username: [OK - Backup] SSH zip backup transfer is successful

Nov 29 05:00:02 cryptsus username: [OK - Backup] GitHub backup successful

Nov 29 06:00:04 cryptsus username: [OK - Backup] Creating Vultr snapshot for VPS: 'web01-p-cryptsus' with SUBID: '43424578'

Nov 29 06:00:05 cryptsus username: [OK - Backup] Creating Vultr snapshot for VPS: 'web02-p-cryptsus' with SUBID: '14435351'

[...]

We can forward these logs with rsyslog (or OpenBSD's own syslogd) to a centralised SIEM (Security Information and Event Management) system, such as the ELK Stack (Elasticsearch, Logstash and Kibana), and create alerts based on these log entries. This way, we get a notification when a backup fails.
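Without a SIEM, a cron job on the web server can perform a basic version of this check. Counting today's successful entries, rather than only looking for failures, also catches a backup job that never ran and therefore logged nothing. The following is a minimal sketch; the script name check-backups.sh is hypothetical, it assumes the traditional BSD syslog timestamps shown above, and it assumes failures are logged with a [FAIL - Backup] prefix (the excerpt above only shows successful runs).

```shell
#!/bin/sh
# Hypothetical check-backups.sh: run from cron after the last backup job.
set -u

LOGFILE=${LOGFILE:-/var/log/messages}
# One OK entry each for rsync, zip and GitHub, plus one per snapshotted
# VPS: five in the log excerpt above.
EXPECTED=${EXPECTED:-5}

check_backups() {
    # Syslog timestamps look like "Nov 29 03:00:02"; days 1-9 are
    # space-padded, which matches date's %e in the C locale.
    today=$(LC_ALL=C date '+%b %e')
    ok=$(grep -c "^$today .*\[OK - Backup\]" "$LOGFILE" 2>/dev/null)
    failed=$(grep -c "^$today .*\[FAIL - Backup\]" "$LOGFILE" 2>/dev/null)
    ok=${ok:-0}
    failed=${failed:-0}
    if [ "$failed" -gt 0 ] || [ "$ok" -lt "$EXPECTED" ]; then
        # cron mails any output of the job to the crontab owner
        echo "Backup check: $ok OK (expected $EXPECTED), $failed FAILED" >&2
        return 1
    fi
}

# Only run when executed under this file name.
case "${0##*/}" in
    check-backups.sh) check_backups ;;
esac
```

Keep in mind that log rotation between the backup jobs and the check can hide entries from this simple count.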

On the target backup VPS, we configure a retention time of 180 days with cron. This way, encrypted backups older than 180 days are deleted automatically to save disk space:

$ crontab -e

0 7 * * * find /home/username/backup-zip -type f -mtime +180 -name "*.zip.enc" -exec rm {} \;
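The find command above deletes every backup older than 180 days, even if new backups stopped arriving months ago, which would eventually leave you with nothing. A variant worth considering always keeps a minimum number of the most recent backups regardless of age. The following is a minimal sketch; the script name prune-backups.sh and the value of 30 kept files are hypothetical, and it assumes backup file names contain no whitespace.

```shell
#!/bin/sh
# Hypothetical prune-backups.sh: delete *.zip.enc files in directory $1 that
# are older than $2 days, but always keep the $3 most recent files.
set -u

prune_backups() {
    dir=$1 days=$2 keep=$3
    # ls -t lists newest first; skip the $keep newest and consider the rest.
    ls -1t "$dir"/*.zip.enc 2>/dev/null | tail -n +"$((keep + 1))" |
    while read -r f; do
        # find prints the path only if it was modified more than $days days ago
        if [ -n "$(find "$f" -prune -mtime +"$days")" ]; then
            rm -f "$f"
        fi
    done
}

# Only run when executed under this file name.
case "${0##*/}" in
    prune-backups.sh) prune_backups /home/username/backup-zip 180 30 ;;
esac
```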

Considerations

Placing individual backup scripts and configuring crontabs on every machine by hand does not scale. Look into Ansible, an automation and provisioning tool that piggybacks on SSH. This way, you turn your backups into transparent Backup as Code (BaC) building blocks. You can also leverage CI/CD pipelines for this purpose.

Finally, look at your business risks, possible security threats, availability risks, and legal and audit requirements. From there, design a holistic backup policy for your IT environment. Also consider extending your crontab with weekly and/or monthly backups. Last but not least, back up to an encrypted offline storage location: ransomware cannot encrypt or delete backups it cannot reach.